Recently I have been busy with several projects, and one problem I needed to solve was how to improve the availability of a system. I started looking for solutions and focused on load balancing, using HAProxy together with Redis and Socket.IO to build a load-balanced system. As you may know, HAProxy is a fast and reliable solution offering high availability, load balancing, and proxying for TCP and HTTP-based applications. Some performance tests report that it can handle more than 2 million concurrent TCP connections. HAProxy appears in many large systems, such as online games and banking systems, where it balances load across millions of users and real-time connections. Today I will show how to install, configure, and deploy HAProxy to load balance multiple nodes running socket.io servers. I will build a small demo with a Node.js server and a socket.io client.

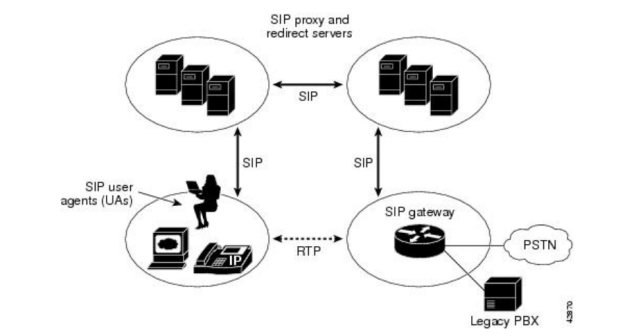

I will test using the model shown in the diagram.

I have four servers:

- Test1: 4 cores, 16 GB RAM

- Test2: 4 cores, 16 GB RAM

- Test3: 6 cores, 32 GB RAM

- Test4: 4 cores, 16 GB RAM

OK, let's build a simple example to test HAProxy.

Step 1: Install HAProxy on the Test4 server

* yum install haproxy

Step 2: Open the configuration file “/etc/haproxy/haproxy.cfg” and add the following content

    frontend http-in
        bind *:9199
        default_backend socketio-nodes
        stats enable
        stats uri /?stats
        stats auth admin:admin

    backend socketio-nodes
        option forwardfor
        option http-server-close
        option forceclose
        no option httpclose
        balance url_param session_id check_post 64
        cookie SID insert indirect nocache
        server node1 test1.azstack.com:9100 weight 1 maxconn 1024 check cookie node1
        server node2 test2.azstack.com:9100 weight 1 maxconn 2048 check cookie node2
        server node3 test3.azstack.com:9100 weight 2 maxconn 4096 check cookie node3

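A few settings are worth noting:

balance url_param session_id check_post 64 : the selection criterion for load distribution. HAProxy chooses the server from the value of the session_id URL parameter, and check_post also searches for the parameter in the body of POST requests.

weight 1 : the relative weight of each node. With weights 1, 1, and 2, node3 receives about twice the share of each of the other two nodes.

maxconn 1024 : the maximum number of concurrent connections to the node.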
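To make the effect of the balance and weight settings more concrete, here is a minimal sketch of weighted, hash-based server selection. It is only an illustration under stated assumptions: the hash function and the `pickServer` helper are hypothetical and are not HAProxy's actual implementation. The point is that the same session_id always maps to the same node, and a node with weight 2 owns twice as many slots as a node with weight 1.

```javascript
// Illustration only: NOT HAProxy's real algorithm or hash function.
// Node names and weights mirror the configuration above.
const servers = [
  { name: "node1", weight: 1 },
  { name: "node2", weight: 1 },
  { name: "node3", weight: 2 },
];

// Simple deterministic string hash (djb2 variant), assumed for this sketch.
function hashString(value) {
  let hash = 5381;
  for (let i = 0; i < value.length; i++) {
    // Keep the result as an unsigned 32-bit integer.
    hash = ((hash * 33) ^ value.charCodeAt(i)) >>> 0;
  }
  return hash;
}

// Map a session_id to a server name. Each server owns `weight` slots
// out of the total weight, so heavier servers are chosen more often.
function pickServer(sessionId, nodes = servers) {
  const totalWeight = nodes.reduce((sum, node) => sum + node.weight, 0);
  let slot = hashString(sessionId) % totalWeight;
  for (const node of nodes) {
    if (slot < node.weight) return node.name;
    slot -= node.weight;
  }
  throw new Error("no server available");
}

console.log(pickServer("user-42"));
```

Calling `pickServer` twice with the same session_id returns the same node name, which is the property that keeps a client on one socket.io server.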

Step 3: Start HAProxy

* /usr/sbin/haproxy -f /etc/haproxy/haproxy.cfg

HAProxy endpoint: test4.azstack.com:9199

Step 4: Create a simple socket.io server that listens on port 9100

https://github.com/kimxuyenthien/socketio-demo/blob/master/server

Copy the socket.io server to the other two nodes and run it on each of them

* node socketio-server.js

Step 5: Create a simple WebSocket client to connect.

https://github.com/kimxuyenthien/socketio-demo/tree/master/client

Step 6: Connect to HAProxy (invite your friends to join the test)

HAProxy endpoint: test4.azstack.com:9199
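Because the backend balances on the session_id URL parameter, the client needs to send that parameter when it connects. The helper below is a small sketch of how the connection URL could be built. The `buildEndpoint` name and the "user-42" value are hypothetical, and `/socket.io/` is assumed to be the path in use (it is the socket.io default). The demo client in the repository may do this differently.

```javascript
// Hypothetical helper: builds the HAProxy endpoint URL with a session_id
// query parameter so that "balance url_param session_id" has a value to use.
function buildEndpoint(baseUrl, sessionId) {
  // "/socket.io/" is the default socket.io path; adjust if yours differs.
  const url = new URL("/socket.io/", baseUrl);
  url.searchParams.set("session_id", sessionId);
  return url.toString();
}

console.log(buildEndpoint("http://test4.azstack.com:9199", "user-42"));
// prints "http://test4.azstack.com:9199/socket.io/?session_id=user-42"
```

With the socket.io client library, the same parameter can usually be passed through the `query` option when opening the connection instead of building the URL by hand.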

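Step 7: Check the logs on the socket.io servers

My connection:

And more connections from my friends:

As you can see, the client IP always appears as 128.199.123.119. That is expected, because every packet is forwarded by HAProxy. With option forwardfor enabled, HAProxy adds the original client address to the X-Forwarded-For header.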
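If you want the server log to show the real client address instead of the proxy address, you can read the X-Forwarded-For header that `option forwardfor` adds. The following is a minimal sketch, assuming a single trusted HAProxy in front of the nodes. The `clientAddress` helper is hypothetical and is not part of the demo repository.

```javascript
// Hypothetical helper: returns the client address as seen by the proxy.
// `headers` is a Node.js-style header object with lower-cased keys;
// `remoteAddress` is the address of the TCP peer (HAProxy in this setup).
function clientAddress(headers, remoteAddress) {
  const forwarded = headers["x-forwarded-for"];
  if (typeof forwarded === "string" && forwarded.trim() !== "") {
    // The header may hold a comma-separated list. The last entry is the
    // one appended by the nearest proxy, so it is the one we trust here.
    const parts = forwarded.split(",").map((part) => part.trim());
    return parts[parts.length - 1];
  }
  // No proxy header present: fall back to the socket's peer address.
  return remoteAddress;
}

console.log(clientAddress({ "x-forwarded-for": "203.0.113.7" }, "128.199.123.119"));
// prints "203.0.113.7"
```

In a socket.io server the request headers are available on `socket.handshake.headers`, so the helper could be called from the connection handler. Only trust this header when clients cannot reach the nodes directly, since a client can send its own X-Forwarded-For value.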

Some advanced topics for you:

- How to set up SSL for HAProxy.

- How to use Redis to run a cluster of socket.io servers.

Maybe I will write about these topics later. Thanks for reading, and have a nice day.